Knowledge Graph Generation

What is Graph Representation?

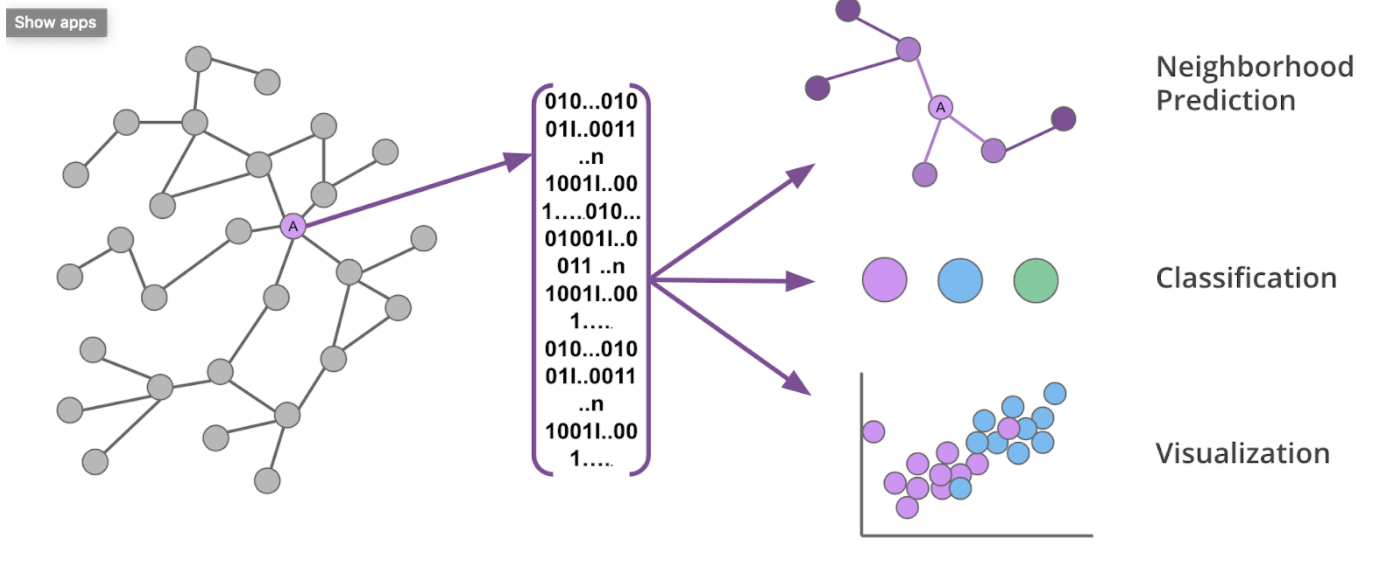

In data science and machine learning, graphs are a powerful tool for showing relationships between different entities. Unlike traditional methods that treat each data point as a separate item, graph representations capture the connections between them, offering a more complete and insightful view.

A graph consists of nodes (or vertices) and edges. Nodes represent entities such as people, objects, or concepts, while edges denote the relationships between these entities.

By leveraging graph representation, we can encode not only the individual properties of entities but also the rich, contextual relationships between them. This allows us to move beyond simplistic, point-in-space embeddings and instead model data as a network of interconnected entities.

How can Knowledge Graphs help Embeddings and LLMs?

Knowledge Graphs (KGs) extend the idea of graph representation by organizing information into a structured, relational format. KGs are particularly useful in capturing the semantic relationships between different entities, which is invaluable for tasks like information retrieval, recommendations, and language understanding.

When we apply Knowledge Graph embeddings, we transform the nodes and edges of a KG into a vector space, preserving the relational structure and semantic meaning. These embeddings can be used to enhance traditional machine learning models, including Large Language Models (LLMs).
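As a rough illustration of what "preserving the relational structure" can mean, the sketch below uses a TransE-style scoring rule, where a triplet (head, relation, tail) is treated as plausible when head + relation lands close to tail in the vector space. TransE is only one of several KG embedding methods and is not necessarily what was used here; the entity names and 2-D vectors are hand-picked toy values, not trained embeddings.

```python
import math

# Hand-picked 2-D toy embeddings (illustrative assumptions, not trained values).
entity_vec = {
    "solar power": (1.0, 0.0),
    "renewable energy": (1.0, 1.0),
    "coal power": (-1.0, 0.0),
}
relation_vec = {
    "subclass of": (0.0, 1.0),
}


def transe_score(head: str, relation: str, tail: str) -> float:
    """Return a TransE-style plausibility score: -||h + r - t||.

    Scores closer to 0 mean the triplet fits the embedding geometry better.
    """
    h = entity_vec[head]
    r = relation_vec[relation]
    t = entity_vec[tail]
    translated = tuple(hi + ri for hi, ri in zip(h, r))
    return -math.dist(translated, t)


plausible = transe_score("solar power", "subclass of", "renewable energy")
implausible = transe_score("coal power", "subclass of", "renewable energy")
print(plausible, implausible)
```

In a trained model the vectors would be learned so that observed triplets score higher than corrupted ones; here the geometry is set by hand only to show the scoring rule.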

Benefits of Knowledge Graphs for Embeddings and LLMs:

Contextual Understanding: KGs allow embeddings to be context-aware by preserving the relationships between entities. This leads to more accurate and meaningful representations, particularly when dealing with multi-modal data.

Enhanced Information Retrieval: By incorporating KGs, embeddings can improve search results by understanding the relationships between query terms and the data. This is especially useful in domains like e-commerce, where users might search for products using various descriptions.

Improved Recommendations: KGs can help in generating more relevant recommendations by understanding user preferences and the relationships between different items. For instance, if a user frequently interacts with content about renewable energy, the system might recommend related articles on solar power, energy storage, and environmental policy.

Better Large Language Models: LLMs, when integrated with KG embeddings, can generate more contextually accurate and semantically rich responses. This is particularly important in applications like chatbots and virtual assistants, where understanding the user's intent and providing relevant answers is crucial.

Methods used by us for Text KG

Dataset used - The dataset is a 1,000-row subset of the COYO-700M text-image pair dataset, available on Kaggle as coyo-1k-reduced.

Triplet Extraction - Relational triplets form the backbone of any knowledge graph. A triplet consists of three components: the subject (head), the predicate (relation), and the object (tail). We use the REBEL model, which processes each caption in the dataset and generates these triplets. Multiple triplets can be extracted from a single image caption, capturing the various relationships between the entities described in the text.

REBEL is a seq2seq model based on BART that performs end-to-end relation extraction for more than 200 different relation types. The paper can be found here
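A minimal sketch of this step is shown below. It assumes the Babelscape/rebel-large checkpoint loaded through the Hugging Face transformers library, and it assumes the decoded output follows the linearized form `<triplet> head <subj> tail <obj> relation` described on the model card. The function names and generation settings (`max_length`, `num_beams`) are illustrative choices, not the exact code used for this project.

```python
def generate_linearized(caption: str, model_name: str = "Babelscape/rebel-large") -> str:
    """Run REBEL on one caption and return the decoded text with special tokens kept.

    Imports are local so the parser below can be used without downloading the model.
    """
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    inputs = tokenizer(caption, return_tensors="pt", truncation=True, max_length=256)
    generated = model.generate(**inputs, max_length=256, num_beams=3)
    return tokenizer.batch_decode(generated, skip_special_tokens=False)[0]


def parse_triplets(linearized: str) -> list[dict[str, str]]:
    """Parse '<triplet> head <subj> tail <obj> relation' sequences into dicts."""
    for special in ("<s>", "</s>", "<pad>"):
        linearized = linearized.replace(special, " ")
    for marker in ("<triplet>", "<subj>", "<obj>"):
        linearized = linearized.replace(marker, f" {marker} ")

    triplets = []
    fields = {"head": [], "tail": [], "type": []}
    current = None  # name of the field that plain tokens are appended to

    def flush():
        # Emit a triplet only when all three parts have been collected.
        if all(fields.values()):
            triplets.append({key: " ".join(tokens) for key, tokens in fields.items()})

    for token in linearized.split():
        if token == "<triplet>":
            flush()
            fields["head"], fields["tail"], fields["type"] = [], [], []
            current = "head"
        elif token == "<subj>":
            # A new tail for the same head starts here.
            flush()
            fields["tail"], fields["type"] = [], []
            current = "tail"
        elif token == "<obj>":
            fields["type"] = []
            current = "type"
        elif current is not None:
            fields[current].append(token)
    flush()
    return triplets


sample = "<s><triplet> Punta Cana <subj> Dominican Republic <obj> country</s>"
print(parse_triplets(sample))
```

Calling `parse_triplets(generate_linearized(caption))` for each caption would yield the list of head/type/tail dictionaries; the first call downloads the model weights.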

Methods used for Visualization -

After extracting the triplets, the next step is to visualize the knowledge graph. Visualization helps in understanding the structure and relationships within the data. We used four different methods to visualize the KG:

a. Neo4j

Neo4j is a graph database that excels at managing and querying large-scale knowledge graphs. By importing the extracted triplets into Neo4j, we could visually explore the connections between entities. Neo4j’s powerful query language, Cypher, allows for complex querying and analysis, making it an ideal choice for deeper insights into the graph structure.
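One way to import triplets is to turn each one into a Cypher `MERGE` statement, as in the sketch below. The `Entity` label, the `name` property, and the helper names are assumptions made for illustration; the schema actually used for this project is not documented here.

```python
import re


def relation_to_type(relation: str) -> str:
    """Turn free text such as 'located in' into a type name like LOCATED_IN."""
    cleaned = re.sub(r"[^0-9A-Za-z]+", "_", relation).strip("_").upper()
    return cleaned or "RELATED_TO"


def triplet_to_cypher(triplet: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Build a parameterized MERGE statement for one head/type/tail triplet.

    Entity names are passed as parameters. The relationship type is written
    into the query text because Cypher does not accept it as a parameter.
    """
    rel_type = relation_to_type(triplet["type"])
    query = (
        "MERGE (h:Entity {name: $head}) "
        "MERGE (t:Entity {name: $tail}) "
        f"MERGE (h)-[:`{rel_type}`]->(t)"
    )
    return query, {"head": triplet["head"], "tail": triplet["tail"]}


query, params = triplet_to_cypher(
    {"head": "Punta Cana", "type": "country", "tail": "Dominican Republic"}
)
print(query)
print(params)
```

With the official `neo4j` Python driver, each pair could then be executed with `session.run(query, params)`. Using `MERGE` rather than `CREATE` keeps repeated entities from being duplicated when the same name appears in several triplets.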

b. NetworkX

NetworkX is a Python library designed for the creation, manipulation, and study of complex networks. We utilized NetworkX to generate basic visual representations of the knowledge graph. This method is particularly useful for smaller datasets or when you need to quickly prototype and analyze the structure of your KG without the overhead of a database.
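A minimal sketch of this approach follows. The triplets are hypothetical examples in a head/type/tail shape, and the drawing options (layout seed, colors, output file name) are arbitrary choices.

```python
import networkx as nx

# Hypothetical triplets, used only to illustrate the graph construction.
triplets = [
    {"head": "boy", "type": "sitting on", "tail": "bench"},
    {"head": "girl", "type": "sitting on", "tail": "bench"},
    {"head": "dog", "type": "in front of", "tail": "bench"},
]


def build_graph(triplets):
    """Create a directed graph with one labeled edge per triplet."""
    graph = nx.DiGraph()
    for triplet in triplets:
        graph.add_edge(triplet["head"], triplet["tail"], label=triplet["type"])
    return graph


def draw_graph(graph, path="kg.png"):
    """Render the graph to an image file (requires matplotlib)."""
    import matplotlib.pyplot as plt

    pos = nx.spring_layout(graph, seed=42)
    nx.draw_networkx(graph, pos, node_color="lightblue", node_size=1500)
    edge_labels = nx.get_edge_attributes(graph, "label")
    nx.draw_networkx_edge_labels(graph, pos, edge_labels=edge_labels)
    plt.axis("off")
    plt.savefig(path, bbox_inches="tight")
    plt.close()


kg = build_graph(triplets)
print(kg.number_of_nodes(), kg.number_of_edges())
```

Note that a `DiGraph` keeps only one edge per ordered pair of nodes, so a second relation between the same two entities would overwrite the first; `nx.MultiDiGraph` is the alternative when that matters.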

c. Plotly

Plotly is a versatile graphing library that supports interactive visualizations. For our KG, we used Plotly to create interactive, web-based visualizations that allow for dynamic exploration of the relationships between entities. The ability to zoom, pan, and interact with the graph nodes and edges provides an engaging way to explore the KG.

d. Graphviz

Graphviz is a robust tool for rendering graphical representations of data structures. It excels at generating static images of graphs, which are useful for documentation and presentations. We used Graphviz to produce clear and concise visualizations of the KG, highlighting the most important relationships extracted from the dataset.
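As a small sketch of this route, the function below writes triplets out in Graphviz's DOT language using only the Python standard library. The sample triplets, the graph name, and the layout attributes (`rankdir`, node shape) are illustrative assumptions.

```python
def escape(text: str) -> str:
    """Escape backslashes and double quotes for use inside a DOT string."""
    return text.replace("\\", "\\\\").replace('"', '\\"')


def triplets_to_dot(triplets, name: str = "KG") -> str:
    """Serialize head/type/tail triplets as a directed graph in DOT syntax."""
    lines = [f"digraph {name} {{", "  rankdir=LR;", "  node [shape=box];"]
    for triplet in triplets:
        head = escape(triplet["head"])
        tail = escape(triplet["tail"])
        label = escape(triplet["type"])
        lines.append(f'  "{head}" -> "{tail}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical triplets used only for illustration.
dot_source = triplets_to_dot([
    {"head": "boy", "type": "sitting on", "tail": "bench"},
    {"head": "dog", "type": "in front of", "tail": "bench"},
])
print(dot_source)
```

Saving the output to a file such as `kg.dot` and running `dot -Tpng kg.dot -o kg.png` renders a static image suitable for documentation.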

Okay, what about the Image KG?

We followed two approaches to create image scene graphs:

Vision Language Model (VLMs)

Relationformer (see the paper: https://arxiv.org/abs/2203.10202)

How did we go about using VLMs for this task?

For this approach, we used LLaVA 13B, a multimodal Vision-Language Model (VLM), to generate text from an image based on a given prompt.

The model was loaded on CUDA with 4-bit quantization, configured through bitsandbytes.

When presented with an image, the model was prompted to "describe in detail the image and its objects."

The model's output for this prompt was then used for the triplet extraction task.

[['The image features a group of people, including a boy and a girl, sitting on a bench'], [' They are accompanied by a white dog, which is sitting on the ground in front of them'], [" The scene appears to be a casual gathering, with the people and the dog enjoying each other's company"]]
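The loading, prompting, and sentence-splitting steps can be sketched roughly as follows. The checkpoint name `llava-hf/llava-1.5-13b-hf`, the `USER: <image> ... ASSISTANT:` prompt template, the float16 compute dtype, and the helper names are assumptions based on the Hugging Face LLaVA-1.5 integration; the exact checkpoint and settings used for this project are not stated. Splitting on periods is a guess at how the nested list shown above was produced.

```python
PROMPT = "describe in detail the image and its objects."


def build_prompt(instruction: str = PROMPT) -> str:
    """Wrap the instruction in the chat template used by LLaVA-1.5 checkpoints."""
    return f"USER: <image>\n{instruction} ASSISTANT:"


def load_llava_4bit(model_id: str = "llava-hf/llava-1.5-13b-hf"):
    """Load the processor and a 4-bit quantized model (needs a CUDA GPU and bitsandbytes)."""
    import torch
    from transformers import (
        AutoProcessor,
        BitsAndBytesConfig,
        LlavaForConditionalGeneration,
    )

    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_compute_dtype=torch.float16,
    )
    processor = AutoProcessor.from_pretrained(model_id)
    model = LlavaForConditionalGeneration.from_pretrained(
        model_id,
        quantization_config=quant_config,
        device_map="auto",
    )
    return processor, model


def describe_image(image_path: str, processor, model, max_new_tokens: int = 200) -> str:
    """Generate a description for one image and return only the model's answer."""
    from PIL import Image

    image = Image.open(image_path).convert("RGB")
    inputs = processor(images=image, text=build_prompt(), return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    decoded = processor.decode(output_ids[0], skip_special_tokens=True)
    return decoded.split("ASSISTANT:")[-1].strip()


def split_description(description: str) -> list[list[str]]:
    """Split a description into one-sentence lists, dropping empty pieces."""
    return [[sentence] for sentence in description.split(".") if sentence.strip()]


print(split_description("A boy sits on a bench. A white dog sits in front of him."))
```

Each inner list can then be passed to the triplet extraction step one sentence at a time.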

Triplet Extraction with Babelscape REBEL-large (https://huggingface.co/Babelscape/rebel-large)

With the detailed textual description in hand, the next step involved transforming this unstructured information into a structured format. This was achieved using the REBEL model, a specialized tool for triplet extraction. The process involved identifying and extracting triplets in the form of head-relation-tail (H-R-T) structures. These triplets serve as the foundational building blocks for knowledge graphs, where the "head" represents an entity, the "relation" indicates the type of connection, and the "tail" signifies another entity linked to the head.

Constructing the Knowledge Graph

Once the triplets were extracted, the task of constructing the knowledge graph began. Using visualization libraries like NetworkX and Plotly, the extracted triplets were organized into a coherent and visually interpretable knowledge graph.

As can be seen, the generated knowledge graph is hit-and-miss: not everything is correct, but most of the relationships make sense.

Relationformer

Along with using VLMs, we looked into other preexisting frameworks for scene graph generation. We used Relationformer for its acceptable results and comparatively well-documented code. The architectural overview of this framework is given in the figure below. It uses an encoder-decoder architecture in which the image is encoded and the encoding is then used for decoder cross-attention.

The decoder has N [obj] (object) tokens and one [rel] (relation) token.

When output by the decoder, the object tokens encode the information for object detection, and the relation token encodes the information for relation prediction.
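The token layout can be sketched in PyTorch as below. This is a simplified stand-in and not the Relationformer implementation: it uses a plain `nn.TransformerDecoder`, small hypothetical dimensions, and assumed heads with an extra "no object" / "no relation" class. Only the idea of N [obj] tokens plus one [rel] token, with relations predicted from pairs of object tokens combined with the relation token, follows the description above; the 150 object and 50 relation categories match the Visual Genome setup used for training.

```python
import torch
from torch import nn


class TokenDecoderSketch(nn.Module):
    """Toy decoder with N learned [obj] tokens and one learned [rel] token."""

    def __init__(self, num_obj_tokens=20, d_model=64,
                 num_obj_classes=150, num_rel_classes=50):
        super().__init__()
        self.num_obj_tokens = num_obj_tokens
        # N [obj] tokens followed by a single [rel] token.
        self.tokens = nn.Parameter(torch.randn(num_obj_tokens + 1, d_model))
        layer = nn.TransformerDecoderLayer(
            d_model, nhead=4, dim_feedforward=128, batch_first=True
        )
        self.decoder = nn.TransformerDecoder(layer, num_layers=2)
        self.class_head = nn.Linear(d_model, num_obj_classes + 1)  # +1: "no object"
        self.box_head = nn.Linear(d_model, 4)
        self.rel_head = nn.Linear(3 * d_model, num_rel_classes + 1)  # +1: "no relation"

    def forward(self, image_features):
        batch = image_features.shape[0]
        queries = self.tokens.unsqueeze(0).expand(batch, -1, -1)
        # Tokens attend to the encoded image through decoder cross-attention.
        decoded = self.decoder(tgt=queries, memory=image_features)
        obj_tokens, rel_token = decoded[:, :-1], decoded[:, -1]

        # Build every ordered (subject, object) pair and append the [rel] token.
        n = self.num_obj_tokens
        subjects = obj_tokens.unsqueeze(2).expand(-1, -1, n, -1)
        objects = obj_tokens.unsqueeze(1).expand(-1, n, -1, -1)
        relation = rel_token[:, None, None, :].expand(-1, n, n, -1)
        pairs = torch.cat([subjects, objects, relation], dim=-1)

        class_logits = self.class_head(obj_tokens)
        boxes = self.box_head(obj_tokens).sigmoid()
        rel_logits = self.rel_head(pairs)
        return class_logits, boxes, rel_logits


model = TokenDecoderSketch()
features = torch.randn(2, 49, 64)  # stand-in for encoded image patches
class_logits, boxes, rel_logits = model(features)
print(class_logits.shape, boxes.shape, rel_logits.shape)
```

The relation logits form an N x N grid per image, one entry for each ordered pair of object tokens, which is what allows a scene graph to be read off in a single forward pass.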

To run inference with this framework, we wrote inference code and documented the setup needed to replicate our results.

Some things you should be aware of while running inference/training:

The framework was trained on the Visual Genome dataset (https://homes.cs.washington.edu/~ranjay/visualgenome/index.html), which has 150 object categories and 50 relation categories. We organized it and made it available here. You can use this for training.

Also, to fit the model on the available GPUs for training, we reduced the model size; please refer to the config file, which is also in the repository.

We also provide our pretrained weights in the same inference notebook.

Results

Here we list the results we got after training for five epochs. We plot only the highest-scoring object detections and relations. Both the object detections and the relation predictions in the graph show that the model has not converged. This may be because we changed the model architecture without changing the underlying hyperparameters, or simply because of insufficient training.
